Deploying new IdP code

General Information

A few notes on our deploy process.

Cadence

- Most weeks, we plan to do a full deploy on Tuesday and Thursday.

- We are able to deploy at any time, but off-cycle deploys should be communicated to on-callers if possible.

- We default to not deploying if we expect most of the team will be out on the day(s) following. Some examples are:

  - Fridays

  - Before a holiday

  - Before a large part of the team is expected to be on leave

Types of Deploys

All deploys to production require a code reviewer to approve the changes to the stages/prod branch.

| Type | What | When | Who |
|---|---|---|---|
| Full Deploy | The normal deploy, releases all changes on the main branch to production. | Twice a week | [@login-deployer][deployer-rotation] |
| Patch Deploy | A deploy that cherry-picks particular changes to be deployed | For urgent bug fixes | The engineer handling the urgent issue |
| Off-Cycle/Mid-Cycle Deploy | Releases all changes on the main branch, sometime during the middle of a sprint | As needed, or if there are too many changes needed to cleanly cherry-pick as a patch | The engineer that needs the changes deployed |
| Config Recycle | A deploy that just updates configurations, and does not deploy any new code, see config recycle | As needed | The engineer that needs the changes deployed |
| No-Migration Recycle | A deploy that skips migrations, see no-migration recycle | As needed | The engineer that needs the changes deployed |

Communications

Err on the side of overcommunication about planned and unplanned deploys: post the steps in Slack as they are happening and coordinate with @login-appdev-oncall. Most people expect changes to be deployed on a schedule, so early releases can be surprising.

Deploy Guide

This is a guide for the Deployer, the engineer who shepherds code to production for a given release.

When deploying a new release, the Deployer should make sure to deploy new code for the following:

This guide assumes that:

- You have a GPG key set up with GitHub (for signing commits)

- You have set up aws-vault, and can SSH (via ssm-instance) in to our production environment

Pre-deploy

Check NewRelic

Bring up NewRelic (prod.login.gov) to check that the rate of errors is “typical” of the IdP to ensure that we are in a clean state and ready to deploy. Have NewRelic up throughout the deploy to monitor and watch for issues due to the deploy.

Test the proofing flow in staging

If the version in staging (that would be deployed) has been verified by Team Charity (as reported in the #login-team-charity Slack channel), you can skip this step.

Since identity proofing requires an actual person’s PII, we don’t have a good mechanism for automated testing of the live proofing flow. As a work-around, we test by proofing in staging, then cutting a release from the code deployed to staging. If there are specific commits that need to be deployed, make sure to recycle staging first to include those commits.

Once you’ve run through proofing in staging, the next step is to cut a release from the code that is deployed to staging in main.

Cut a release branch

Prerequisites

The IdP includes a script to create deployment PRs. It relies on gh, the GitHub command line interface. Install that first and authenticate it:

brew install gh

gh auth login
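Before starting, it can help to confirm that the tools this guide relies on are available on your PATH. The following is a minimal sketch and not part of the IdP repo: the function name `missing_tools` is hypothetical, and the usage line assumes the GPG binary is installed under the name `gpg`.

```shell
# Hypothetical helper (not part of identity-idp): prints each named
# command that cannot be found on PATH, one per line.
missing_tools() {
  for tool in "$@"; do
    # command -v exits non-zero when the tool is not found
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}

# Usage (assumed tool names): an empty result means all were found.
# missing_tools gh aws-vault gpg
```

This only checks that the commands exist. It does not verify that gh is authenticated or that your aws-vault profiles are configured.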

Creating Deploy PRs

Use scripts/create-deploy-pr to create a new deployment PR:

scripts/create-deploy-pr

If you want to create a patch release, specify PATCH=1:

PATCH=1 scripts/create-deploy-pr

This script will create a new RC branch and PR based on the SHA currently deployed to the staging environment. To override this and specify a different SHA or branch, use the SOURCE variable:

PATCH=1 SOURCE=main scripts/create-deploy-pr

create-deploy-pr will print out a link to the new PR located in tmp/.rc-changelog.md. Be sure to verify the generated changelog after creating the PR.

Pull request release

Release naming and labeling are done automatically in identity-idp after running scripts/create-deploy-pr.

- If there are merge conflicts, check out how to resolve merge conflicts.

Share the pull request in #login-appdev

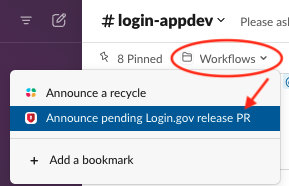

Use the /Announce pending Login.gov release PR workflow in #login-appdev to announce the start of the deployment

- Choose Identity provider (identity-idp) for the application

- Enter the PR link

- The workflow will send a notification to the #login-appdev channel and cross-post to the #login-delivery channel for awareness.

Resolving merge conflicts

A full release after a patch release often results in merge conflicts. To resolve these automatically, we create a git commit with an explicit merge strategy to “true-up” with the main branch (replace all changes on stages/prod with whatever is on main):

cd identity-$REPO

git checkout stages/rc-2020-06-17 # CHANGE THIS DATE

git merge -s ours origin/stages/prod # custom merge strategy

git push -u origin HEAD

The last step may need a force push (add -f). Force-pushing to an RC branch is safe.
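To see why `-s ours` produces the true-up described above, here is a self-contained sketch that runs in a throwaway repository. The branch names `main`, `prod`, and `rc` are stand-ins for main, stages/prod, and the RC branch, and the function name `demo_ours_merge` is hypothetical. It is an illustration of the merge strategy, not a replacement for the commands above.

```shell
# Illustration only: shows that "git merge -s ours" records a merge
# but keeps the current branch's content unchanged.
demo_ours_merge() {
  repo=$(mktemp -d) || return 1
  (
    cd "$repo" || exit 1
    git init -q .
    git symbolic-ref HEAD refs/heads/main   # name the initial branch
    git config user.email "demo@example.com"
    git config user.name "Demo"
    git config commit.gpgsign false         # keep the demo independent of signing setup

    echo "base" > app.txt
    git add app.txt
    git commit -q -m "base"

    # Stand-in for stages/prod after a patch release
    git checkout -q -b prod
    echo "patched on prod" > app.txt
    git commit -q -am "patch"

    # Stand-in for an RC branch cut from main with newer work
    git checkout -q main
    git checkout -q -b rc
    echo "new work on main" > app.txt
    git commit -q -am "main work"

    # A plain merge of prod would conflict here; -s ours does not
    git merge -q -s ours prod -m "true-up with main"
    cat app.txt
  )
  rm -rf "$repo"
}
```

Running `demo_ours_merge` prints the RC branch's version of the file, because the `ours` strategy discards the other side's changes while still recording it as a merged parent.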

Staging

Staging used to be deployed by this process, but this was changed to deploy the main branch to the staging environment every day. See daily deploy schedule for more details.

Production

- Merge the production promotion pull request (NOT a squashed merge, just a normal merge)

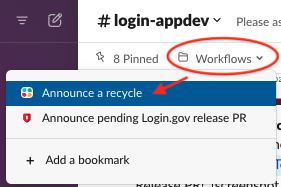

- Use the /Production App Recycle workflow in #login-appdev to announce the start of the deployment

  - Select Deploying from the first dropdown menu

  - Select IDP for Application (should be the default)

  - Select Full recycle for Recycle type (should be the default)

  - Enter the RC number that will be deployed under Version or Reason

  - The workflow will send a notification to the #login-appdev and #login-devops channels

- In the identity-devops repo:

  cd identity-devops

  - Ensure you have the latest code with a git pull.

  - Check current server status, and confirm that there aren’t extra servers running. If there are, scale in old instances before deploying.

    aws-vault exec prod-power -- ./bin/ls-servers -e prod --compact

    aws-vault exec prod-power -- ./bin/asg-size prod idp

  - Recycle the IdP instances to get the new code. It automatically creates a new migration instance first.

    aws-vault exec prod-power -- ./bin/asg-recycle prod idp

    - Be sure to post the estimated time of completion to the recycle announcement thread. It is part of the recycle command output and lets others know when the recycle should complete. The output should look like the following (with different dates and times):

      Recycling prod-idp:

      Will increase to 24 instances in 300s (at 2026-02-04 08:27:58)

      Will return to 12 instances in 900s (at 2026-02-04 08:37:58)

  - Follow the progress of the migrations, ensure that they are working properly

    aws-vault exec prod-power -- ./bin/ssm-instance --document tail-cw --newest asg-prod-migration # may need to wait a few minutes after the recycle

    Check the log output to make sure that db:migrate runs cleanly. Check for “All done! provision.sh finished for identity-devops”, which indicates everything has run.

    Once the migration instance spin up is complete, Ctrl-C out of the tail command and run the same for the now starting idp instances:

    aws-vault exec prod-power -- ./bin/ssm-instance --document tail-cw --newest asg-prod-idp

    Multi-step manual instructions to tail logs:

    aws-vault exec prod-power -- ./bin/ssm-instance --newest asg-prod-migration

    On the remote box:

    tail -f /var/log/cloud-init-output.log

    or

    tail -f /var/log/syslog

  - Follow the progress of the IdP hosts spinning up

    aws-vault exec prod-power -- ./bin/ls-servers -e prod -r idp # check the load balance pool health

  - Manual Inspection

    - Check NewRelic (prod.login.gov) for errors

    - Optionally, use the deploy monitoring script to compare error rates and success rates for critical flows

      aws-vault exec prod-power -- ./bin/monitor-deploy prod idp

    - If you notice any errors that make you worry, roll back the deploy

  - PRODUCTION ONLY: This step is required in production

    Production boxes need to be manually marked as safe to remove by scaling down the old instances (one more step that helps us prevent ourselves from accidentally taking production down). You must wait until after the original scale-down delay before running these commands (the time when the recycle command reported that it would return to its normal count of instances, roughly 15 minutes after recycle).

    aws-vault exec prod-power -- ./bin/scale-remove-old-instances prod ALL

  - Set a timer for one hour, then check NewRelic again for errors.

- If everything looks good, the deploy is complete.
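When posting the estimated completion time to the announcement thread, the relevant values can be pulled out of the recycle output with a small filter. This is a sketch that assumes the output matches the "Will return to N instances in Ns (at ...)" example shown in this guide. The function names are hypothetical and are not part of identity-devops, and any other output format simply yields nothing.

```shell
# Hypothetical helpers: read asg-recycle output on stdin.

# Prints the timestamp at which the instance count returns to normal.
recycle_eta() {
  sed -n 's/.*Will return to [0-9][0-9]* instances in [0-9][0-9]*s (at \([^)]*\)).*/\1/p'
}

# Prints the scale-down delay in seconds (the wait before old
# instances can be removed in production).
recycle_return_delay() {
  sed -n 's/.*Will return to [0-9][0-9]* instances in \([0-9][0-9]*\)s .*/\1/p'
}

# Usage with the example output from this guide:
# printf '%s\n' 'Will return to 12 instances in 900s (at 2026-02-04 08:37:58)' | recycle_eta
```

The delay value is the same waiting period that must elapse before running scale-remove-old-instances in production.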

Creating a Release (Production only)

The IdP includes a script to create a release based on a merged pull request. It relies on gh, the GitHub CLI. Install that first (brew install gh) and get it connected to the identity-idp repo. Then, run the script to create a release:

scripts/create-release <PR_NUMBER>

Where <PR_NUMBER> is the number of the merged PR.

Rolling Back

It’s safer to roll back the IdP to a known good state than leave it up in a possibly bad one.

Some criteria for rolling back:

- Is the error visible for users?

- Is the error going to create bad data that could cause future errors?

- Is there a user-facing bug that could confuse users or produce a wrong result?

- Do you need more than 15 minutes to confirm how bad the error is?

If any of these are “yes”, roll back. See more criteria at https://outage.party/. Staging is a pretty good match for production, so you should be able to fix and verify the bug in staging, where it won’t affect end users.

Scaling Out

To quickly remove new servers and leave old servers up:

aws-vault exec prod-power -- ./bin/scale-remove-new-instances prod ALL

Important:

As soon as possible, ensure that the deploy is rolled back by reverting the stages/prod branch in GitHub by following the steps to roll back below. This is important because new instances can start at any time to accommodate increased traffic, and in response to other recycle operations like configuration changes.

Steps to roll back

- Make a pull request to the stages/prod branch, to revert it back to the last deploy.

  git checkout stages/prod

  git pull # make sure you're at the most recent SHA

  git checkout -b revert-rc-123 # replace with the RC number

  git revert -m 1 HEAD # assumes that the top commit on stages/prod is a merge

- Open a pull request against stages/prod, get it approved, and merged. If urgent, get ahold of somebody with admin merge permissions who can override waiting for CI to finish

- Recycle the app to get the new code out there (follow the Production Deploy steps)

- Schedule a retrospective
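The `-m 1` flag in the revert step tells git which parent of the merge commit to treat as the mainline. The sketch below demonstrates this in a throwaway repository. The branch names `prod`, `rc`, and `revert-rc` are stand-ins for stages/prod, the RC branch, and the revert branch, and the function name is hypothetical.

```shell
# Illustration only: reverting a merge commit with -m 1 restores the
# content of the first parent (the branch that was merged into).
demo_revert_merge() {
  repo=$(mktemp -d) || return 1
  (
    cd "$repo" || exit 1
    git init -q .
    git symbolic-ref HEAD refs/heads/prod   # name the initial branch
    git config user.email "demo@example.com"
    git config user.name "Demo"
    git config commit.gpgsign false         # keep the demo independent of signing setup

    echo "release 1" > app.txt
    git add app.txt
    git commit -q -m "release 1"

    # Stand-in for the RC branch carrying the new release
    git checkout -q -b rc
    echo "release 2" > app.txt
    git commit -q -am "release 2"

    # Normal (non-squash) merge, so the top commit on prod is a merge
    git checkout -q prod
    git merge -q --no-ff rc -m "Deploy RC"

    # Revert the merge relative to its first parent
    git checkout -q -b revert-rc
    git revert -m 1 --no-edit HEAD >/dev/null
    cat app.txt
  )
  rm -rf "$repo"
}
```

Running `demo_revert_merge` prints the previous release's content. This also shows why the production pull request must be merged with a normal merge rather than a squash: `-m 1` only applies when the commit being reverted is a merge.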

Retrospective

If you do end up rolling back a deploy, schedule a blameless retrospective afterwards. These help us think about new checks, guardrails, or monitoring to help ensure smoother deploys in the future.

Config Recycle

A config recycle is an abbreviated “deploy” that deploys the same code, but lets boxes pick up new configurations (config from S3).

- Make the config changes

- Announce the configuration change in #login-appdev

  - Share the diff as a thread comment, omitting any sensitive information

- Recycle the instances:

  aws-vault exec prod-power -- ./bin/asg-recycle prod idp

- In production, it’s important to remember to still remove old IdP instances. You must wait at least 15 minutes, then run:

  aws-vault exec prod-power -- ./bin/scale-remove-old-instances prod ALL

No-Migration Recycle

When responding to a production incident with a config change, or otherwise in a hurry, you might want to recycle without waiting for a migration instance.

- Recycle the instances without a migration instance:

  aws-vault exec prod-power -- ./bin/asg-recycle prod idp --skip-migration

- In production, remove old IdP instances after a 15 minute waiting period:

  aws-vault exec prod-power -- ./bin/scale-remove-old-instances prod ALL

Risks with No-Migration Recycle

For environments other than prod, note that if a migration has been introduced on main, new instances will fail to start until migrations are run.

Additionally, migration instances are responsible for compiling assets. If assets have changed since the last migration, we recommend against running a no-migration recycle. Otherwise, the new servers will look for fingerprinted asset files that don’t exist, because the migration instances never created them.

To tell if assets have changed since the last migration, inspect the Environments status page. Click “pending changes” and determine if any files changed in app/assets/stylesheets or app/javascript.
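If you already have a list of changed file paths (for example from `git diff --name-only` between two SHAs, which is an assumption about your workflow and not something the status page provides), the same check can be sketched as a filter. The function name `changed_asset_paths` is hypothetical, and the two directories are the ones named above.

```shell
# Hypothetical helper: reads file paths on stdin (one per line) and
# prints only those under the asset directories named in this guide.
# Empty output suggests no asset changes in the given list.
changed_asset_paths() {
  # "|| true" keeps a no-match result from being treated as an error
  grep -E '^(app/assets/stylesheets|app/javascript)/' || true
}

# Usage (the SHAs are placeholders you would supply):
# git diff --name-only <old-sha> <new-sha> | changed_asset_paths
```

Any output means assets have changed, in which case the guidance above is to avoid a no-migration recycle.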
